For years, high-end visual effects were something you only saw in Marvel films and perfume ads. Today, the same kind of camera moves, character animation and quick-fire editing tricks are quietly slipping into TikToks, YouTube Shorts and brand promos made in a bedroom.

One of the engines behind this shift is a new wave of AI video models. Among them, Hailuo 2.3 has been getting attention for its surprisingly polished motion, lifelike faces and sensible pricing – a combination that’s turning more casual creators into confident video directors.

But tools are only half the story. The real question is what people are actually doing with them – and how platforms that offer smart, controlled editing features are helping keep this new power on the right side of creativity and ethics.

What makes Hailuo 2.3 different?

Hailuo started life as a video model family focused on realistic physics and consistent characters, aiming to stay out of the “uncanny valley” that plagued early AI video experiments.

The 2.3 generation builds on that in a few key ways:

- Smoother motion and complex choreography

  Hailuo 2.3 is unusually good at dynamic motion – think K-pop dance routines, sports clips, or fashion walks where limbs and clothing need to move in a believable way.

- Sharper, more expressive faces

  It pays a lot of attention to micro-expressions, so subtle changes in eyes and mouth feel more natural instead of plastic or frozen.

- Native 1080p “Pro” output and a faster variant

  The Pro tier delivers 1080p image-to-video, while the 2.3-Fast option trades a little detail for much quicker turnaround and lower cost – useful if you’re testing many versions of a clip.

- Cost-effectiveness for batch content

  MiniMax, the company behind Hailuo, markets 2.3 as “more performance for the same price”, with the Fast version reportedly cutting batch-creation costs by up to half compared with previous models.

In other words, it’s not just a party trick; it’s built to sit inside real content workflows where budgets, timelines and consistency all matter.

How creators actually use Hailuo 2.3

Behind the marketing language, most day-to-day uses fall into a few very practical buckets:

None of this replaces traditional filming entirely. What it does is compress the distance between an idea and something you can share, which is why these models have taken off among solo creators and small teams.

Face swapping, identity and creative control

One of the more controversial – but also hugely popular – use cases in this new landscape is face replacement. On the harmless end, people drop their faces into film scenes, ads or dance routines for fun. On the serious end, studios use controlled face replacement for continuity, stunt doubles and localisation.

Tools built around face swapping in existing footage give users a lot of control over this process. Platforms such as GoEnhance AI wrap these capabilities in more traditional editing features: upscaling, colour tweaks, background clean-up and so on. That makes it possible to combine generated shots from Hailuo 2.3 with live-action clips while keeping everything stylistically coherent.

When you move from generating a full video to applying a targeted edit, that control really matters. A dedicated face-change tool for video can, for instance, enforce stricter rules on upload quality, face clarity and output length – all of which reduce the risk of obviously abusive or misleading clips slipping through unnoticed.

The legal and ethical question you can’t ignore

As you’d expect, this new creative freedom comes with flashpoints. In 2025, a group of major Hollywood studios – including Disney and Warner Bros. Discovery – sued MiniMax, alleging that its Hailuo service had been promoted using copyrighted characters without permission.

The case is still moving through the courts, but the message is already loud and clear:

- Training and promotion matter.

  How these systems are trained, and how they are advertised to users, is now firmly in the sights of regulators and rights-holders.

- User prompts are not a free pass.

  Just because a model can generate a character or logo doesn’t mean you are legally safe to use it in a commercial context.

- Platforms are expected to build guardrails.

  Studios are explicitly asking providers to block obvious infringements – for example, preventing users from generating certain protected characters or mimicking very distinctive brand assets.

For individual creators, the practical takeaway is simple: treat AI-generated footage like any other media asset. If you wouldn’t use a clip you found randomly on the internet without checking rights, don’t assume AI content is magically exempt.

Preserving the human touch in an AI-powered process

There’s also a cultural question hovering over all of this: if models like Hailuo 2.3 can generate competent shots in seconds, what is left for humans to do?

In practice, the people getting the most out of these tools are not the ones pressing “generate” at random. They are the ones who:

- Come in with a clear brief, script or mood board

- Understand pacing, framing and rhythm from years of watching and making video

- Treat AI clips as raw material, not a finished film

- Build their own ethical lines about what they will and will not generate

The technology is impressive, but it still struggles with long-form narrative, subtle cause-and-effect and truly original staging. That’s where human judgement, taste and lived experience remain hard to replace.

What the near future looks like

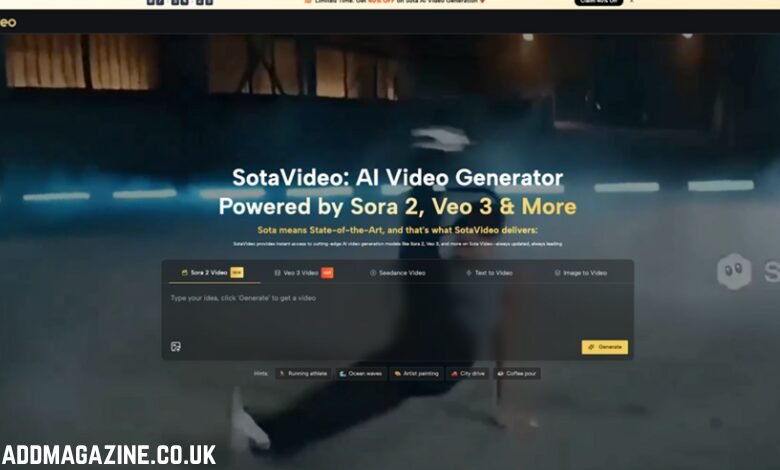

As models such as Hailuo 2.3, Kling 2.6 and the next generation of Sora-style systems continue to mature, the boundaries between “shot in camera” and “generated in the cloud” will keep blurring. We’re already seeing:

- Hybrid adverts where only the product is real and the world around it is synthesised

- Influencers testing wild concepts with AI before investing in full productions

- Indie filmmakers using AI-generated pre-viz to pitch ideas and secure funding

For most readers, the question is not “Will AI replace directors?” but “How comfortable am I using these tools in my own work or personal life?” The sensible answer sits somewhere in the middle: use them as accelerators, not as a substitute for your own taste or ethics.

A practical checklist before you hit “publish”

Whether you’re experimenting with Hailuo 2.3 clips, using a face-swap tool on your holiday videos, or trying to give your side-hustle brand a more polished look, a quick pre-flight check is worth building into your routine:

- Do I have the right to use every logo, character and likeness in this video?

- Could this clip be mistaken for a genuine news report, security camera or official advert?

- If I’m replacing a face, is the person aware and comfortable with it?

- Am I clear about which parts were AI-generated if someone asks?

Handled thoughtfully, these tools don’t have to cheapen video; they can raise the floor, letting more people play with ideas that were previously locked behind budgets, crews and studio access.

The models will keep improving. The real differentiator will be the humans who learn to use them carefully – and creatively.